Kubernetes is a popular portable, extensible, open-source platform for container orchestration. It manages applications built out of multiple, largely self-contained runtimes known as containers. Containers have become increasingly popular since the Docker containerization project launched in 2013, but large, distributed containerized applications can become increasingly difficult to coordinate. By making containerized applications dramatically easier to manage at scale, Kubernetes has become a key part of the container revolution.

The name Kubernetes originates from Greek, meaning helmsman or pilot. Google open-sourced the Kubernetes project in 2014. It combines more than 15 years of Google’s experience running production workloads at scale with best-of-breed ideas and practices from the community. Google runs more than 2 billion container deployments a week, all powered by its internal platform, Borg. Borg was the predecessor to Kubernetes, and the lessons learned from developing Borg over the years became the primary influence behind much of Kubernetes technology.

Kubernetes clusters can span hosts across on-premises, public, private, or hybrid clouds. For this reason, it is an ideal platform for hosting cloud-native applications that require rapid scaling, like real-time data streaming through Apache Kafka.

What is Kubernetes?

Kubernetes is an open-source project that has become one of the most popular container orchestration tools around. It allows you to deploy and manage multi-container applications at scale. In practice it has most often been used with Docker, the most popular containerization platform, but it can also work with any container system that conforms to the Open Container Initiative (OCI) standards for container image formats and runtimes.

Because it is open source, with relatively few restrictions on how it can be used, anyone who wants to run containers can use it freely, wherever they want to run them: on-premises, in the public cloud, or both.

Why do you need it?

Containers are a good way to bundle and run your applications. In a production environment, however, you need to manage the containers that run those applications and ensure that there is no downtime. For example, if a container goes down, another container needs to start. This would be much easier if a system handled it for you.

That is where Kubernetes comes to the rescue. Kubernetes provides you with a framework to run distributed systems resiliently. It takes care of scaling and failover for your application, and it provides deployment patterns and more. For example, it can easily manage a canary deployment for your system.

What can you do with Kubernetes?

The first advantage of using it in your environment is that it gives you a platform to schedule and run containers on clusters of physical or virtual machines (VMs). This is especially useful for optimizing app development for the cloud.

More broadly, it helps you fully implement and rely on a container-based infrastructure in production environments. Because Kubernetes is all about automating operational tasks, you can do many of the same things other application platforms or management systems let you do, but for your containers.

Kubernetes provides you with:

- Storage Orchestration: It allows you to automatically mount a storage system of your choice, such as local storage, public cloud providers, and more.

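- Automatic rollouts and rollbacks: You describe the desired state for your deployed containers, and Kubernetes changes the actual state to the desired state at a controlled rate. For example, you can automate it to create new containers for your deployment, remove existing containers, and adopt all their resources to the new containers.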

- Automatic bin packing: You provide it with a cluster of nodes that it can use to run containerized tasks, and you tell it how much CPU and memory (RAM) each container needs. It can then fit containers onto nodes to make the best use of your resources.

- Service discovery and load balancing: It can expose a container using a DNS name or its own IP address. If traffic to a container is high, it can load balance and distribute the network traffic so that the deployment stays stable.

- Self-healing: It restarts containers that fail, replaces containers, and kills containers that don’t respond to your user-defined health check. It doesn’t advertise them to clients until they are ready to serve.
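
The automatic rollouts listed above can be pictured as a loop that swaps old pods for new ones a few at a time, so the application never goes fully offline. The following is a minimal, hypothetical Python sketch, not Kubernetes code: it represents each pod only by its version string, and the `rolling_update` function and its `max_unavailable` budget are illustrative names, loosely inspired by the idea of limiting how many pods are unavailable during a rollout.

```python
from typing import List


def rolling_update(pods: List[str], new_version: str, max_unavailable: int = 1) -> List[List[str]]:
    """Replace every pod with new_version, changing at most max_unavailable pods per step.

    Hypothetical model: a pod is represented only by the version it runs.
    Returns a snapshot of all pod versions after each step.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    current = list(pods)
    history = [list(current)]
    # Positions of pods that still run an old version.
    outdated = [i for i, version in enumerate(current) if version != new_version]
    while outdated:
        # Take down a small batch of old pods...
        batch, outdated = outdated[:max_unavailable], outdated[max_unavailable:]
        for i in batch:
            # ...and start a replacement running the new version in each slot.
            current[i] = new_version
        history.append(list(current))
    return history


if __name__ == "__main__":
    for step in rolling_update(["v1", "v1", "v1"], "v2", max_unavailable=1):
        print(step)
```

In this model a rollback is simply the same loop pointed back at the previous version, for example `rolling_update(pods, "v1")`.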

What is Docker?

Docker can be used as a container runtime that Kubernetes orchestrates. When Kubernetes schedules a pod to a node, the kubelet on that node instructs Docker to launch the specified containers.

The kubelet then continuously collects the status of those containers from Docker and aggregates that information in the master. Docker pulls container images onto that node and starts and stops those containers.

Kubernetes vs Docker

Kubernetes doesn’t replace Docker but augments it. The difference when using Kubernetes with Docker is that an automated system asks Docker to pull, start, and stop containers, instead of an admin doing so manually on every node for every container.

However, Kubernetes does replace some of the higher-level technologies that have emerged around Docker. One such technology is Docker Swarm, an orchestrator bundled with Docker. Docker Swarm can be used instead of Kubernetes, but Docker Inc. has chosen to make Kubernetes part of the Docker Community and Docker Enterprise editions going forward.

Kubernetes is significantly more complex than Docker Swarm and requires more work to deploy, but that work is intended to provide a big payoff in the long run: a more manageable, resilient application infrastructure. For development work and smaller container clusters, Docker Swarm presents a simpler choice.

Architecture concepts to learn

Kubernetes clusters

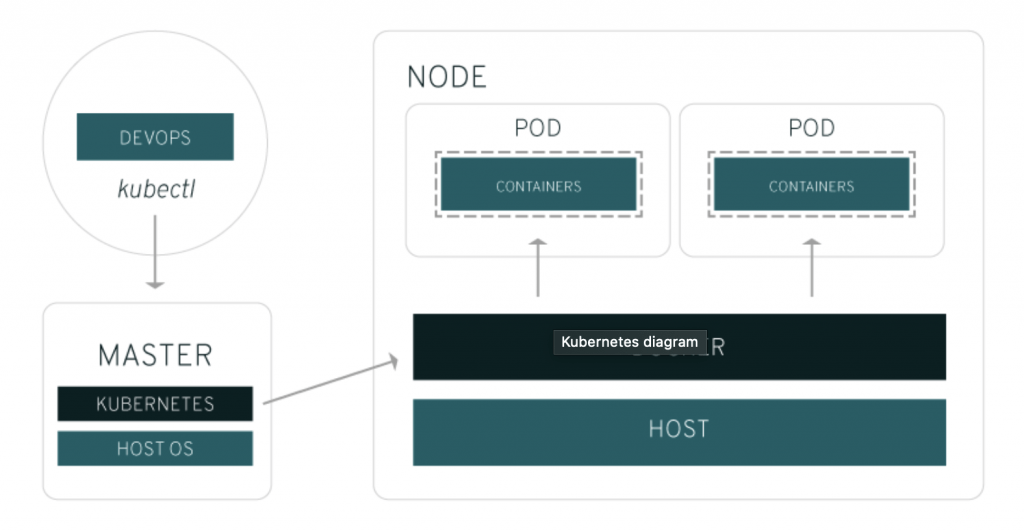

The highest-level Kubernetes abstraction is the cluster. It refers to the group of machines running Kubernetes and the containers it manages. A Kubernetes cluster must have a master, the system that commands and controls all the other machines in the cluster. A highly available Kubernetes cluster replicates the master’s facilities across multiple machines, but only one master at a time runs the job scheduler and controller-manager.
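
Keeping only one master in charge at a time is a leader-election problem. One common way to solve it is a time-limited lease that the leader must keep renewing; if it stops renewing (for example, because its machine failed), another master can take over. The Python sketch below is a hypothetical, in-memory illustration of that idea: `Lease` and `try_acquire` are made-up names, and unlike a real control plane it ignores concurrency and does not store the lease anywhere shared.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """Hypothetical in-memory lease: the current leader and when its claim expires."""
    holder: Optional[str] = None
    expires_at: float = 0.0


def try_acquire(lease: Lease, candidate: str, now: float, duration: float) -> bool:
    """Try to become (or stay) leader; return True if `candidate` now holds the lease.

    A candidate may take the lease if nobody holds it, if it already holds it
    (a renewal), or if the previous holder let it expire without renewing.
    """
    if lease.holder is None or lease.holder == candidate or now >= lease.expires_at:
        lease.holder = candidate
        lease.expires_at = now + duration
        return True
    return False


if __name__ == "__main__":
    lease = Lease()
    print(try_acquire(lease, "master-a", now=0.0, duration=15.0))   # master-a becomes leader
    print(try_acquire(lease, "master-b", now=5.0, duration=15.0))   # rejected: lease still valid
    print(try_acquire(lease, "master-b", now=20.0, duration=15.0))  # master-a never renewed
```

Only the lease holder would run the scheduler and controller-manager; the other masters keep calling `try_acquire` and stay on standby until the lease lapses.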

A Kubernetes cluster should be:

- Secure: It should follow the latest security best practices.

- Easy to use: It should be operable using a few simple commands.

- Extendable: It shouldn’t favor one provider and should be customizable from a configuration file.

Kubernetes nodes and pods

Each cluster contains Kubernetes nodes. Nodes might be physical machines or VMs, and Kubernetes even makes it possible to ensure that certain containers run only on VMs or only on bare metal.

Nodes run pods, the most basic Kubernetes objects that can be created or managed. Each pod represents a single instance of an application or running process in Kubernetes and consists of one or more containers. Kubernetes starts, stops, and replicates all containers in a pod as a group. Pods keep the user’s attention on the application rather than on the containers themselves.

Pods are created and destroyed on nodes as needed to conform to the desired state specified by the user in the pod definition. Kubernetes provides an abstraction called a controller for dealing with the logistics of how pods are spun up, rolled out, and spun down. Controllers come in a few different flavors depending on the kind of application being managed.
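
The core idea behind a controller is a reconciliation loop: compare the desired state with the actual state, then act to close the gap. The Python sketch below is a deliberately simplified, hypothetical controller that only tracks a replica count; the `reconcile` function and the `pod-N` naming scheme are illustrative, not part of any Kubernetes API.

```python
from typing import List, Tuple


def reconcile(desired: int, running: List[str], next_id: int) -> Tuple[List[str], int]:
    """One pass of a hypothetical controller loop.

    Compares the desired replica count with the pods actually running and
    creates or deletes pods until they match. Returns the new pod list and
    the next free pod number.
    """
    pods = list(running)
    # Too few pods (for example, one crashed): start replacements.
    while len(pods) < desired:
        pods.append(f"pod-{next_id}")
        next_id += 1
    # Too many pods (for example, after scaling down): stop the extras.
    while len(pods) > desired:
        pods.pop()
    return pods, next_id


if __name__ == "__main__":
    pods, next_id = reconcile(3, [], 0)
    print(pods)
    pods.remove("pod-1")  # simulate a pod dying
    pods, next_id = reconcile(3, pods, next_id)
    print(pods)
```

A real controller runs this comparison continuously, so the same loop handles crashes, manual deletions, and scaling changes without any special cases.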

Kubernetes dashboard

One Kubernetes component that helps you keep on top of all of these other components is Dashboard, a web-based UI with which you can deploy and troubleshoot apps and manage cluster resources. The dashboard isn’t installed by default, but it is not difficult to install it.

Kubernetes services

Because pods live and die as needed, we need a different abstraction for dealing with the application lifecycle. An application is supposed to be a persistent entity, even when the pods running the containers that comprise the application aren’t themselves persistent. To that end, Kubernetes provides an abstraction called a service.

A service in Kubernetes describes how a given group of pods can be accessed via the network. As the documentation puts it, the pods that constitute the back end of an application might change, but the front end shouldn’t have to know about that or track it. Services make this possible.
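
To see why a stable service name helps, consider a client that only ever asks for `web` while the pod IPs behind it change. The Python sketch below is a hypothetical model, not how Kubernetes implements services: `ServiceRegistry` is a made-up class, the IP addresses are illustrative, and round-robin is used only for simplicity (the balancing strategy of the real service proxy depends on how it is configured).

```python
from typing import Dict, List


class ServiceRegistry:
    """Hypothetical sketch: stable service names in front of a changing set of pod IPs."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, List[str]] = {}
        self._next: Dict[str, int] = {}

    def set_endpoints(self, service: str, pod_ips: List[str]) -> None:
        """Record the ready pods behind a service; called whenever pods come and go."""
        self._endpoints[service] = list(pod_ips)
        self._next.setdefault(service, 0)

    def route(self, service: str) -> str:
        """Clients only know the service name; hand out backends round-robin."""
        backends = self._endpoints.get(service)
        if not backends:
            raise LookupError(f"no ready endpoints for service {service!r}")
        index = self._next[service] % len(backends)
        self._next[service] += 1
        return backends[index]


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.set_endpoints("web", ["10.0.0.1", "10.0.0.2"])
    print([registry.route("web") for _ in range(3)])
    registry.set_endpoints("web", ["10.0.0.3"])  # the old pods were replaced
    print(registry.route("web"))  # the client code did not change
```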

Kubernetes ingress

Kubernetes has several components that facilitate exposing services outside the cluster, with varying degrees of simplicity and robustness, including NodePort and LoadBalancer. But the component with the most flexibility is Ingress. Ingress is an API that manages external access to a cluster’s services, typically via HTTP. It requires an ingress controller running in the cluster, plus some configuration, to work properly.
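
Much of Ingress’s flexibility comes from routing rules that map a host name and URL path to a back-end service. The Python sketch below is a hypothetical illustration of that kind of matching, loosely modeled on prefix-based path rules where the most specific matching prefix wins. The rule format, host names, and service names are all made up for the example; a real ingress controller applies its own, more detailed matching logic.

```python
from typing import List, Optional, Tuple

# Hypothetical rule format: (host, path prefix, backend service name).
Rule = Tuple[str, str, str]


def _segments(path: str) -> List[str]:
    """Split a URL path into its non-empty elements: '/api/v1' -> ['api', 'v1']."""
    return [part for part in path.split("/") if part]


def pick_backend(rules: List[Rule], host: str, path: str) -> Optional[str]:
    """Return the service whose prefix matches `path` most specifically, or None."""
    request = _segments(path)
    best_service: Optional[str] = None
    best_depth = -1
    for rule_host, prefix, service in rules:
        wanted = _segments(prefix)
        # Element-wise match: '/api' matches '/api/v1' but not '/apiv1'.
        matches = request[: len(wanted)] == wanted
        if rule_host == host and matches and len(wanted) > best_depth:
            best_service, best_depth = service, len(wanted)
    return best_service


if __name__ == "__main__":
    rules = [
        ("shop.example.com", "/", "frontend"),
        ("shop.example.com", "/api", "api"),
        ("shop.example.com", "/api/admin", "admin"),
    ]
    print(pick_backend(rules, "shop.example.com", "/api/v1/items"))
    print(pick_backend(rules, "shop.example.com", "/cart"))
```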

How does Kubernetes work?

A working Kubernetes deployment is called a cluster. You can visualize a Kubernetes cluster as two parts: the control plane, which consists of the master node or nodes, and the compute machines or worker nodes.

Worker nodes run pods, which are made up of containers. Each node is its own environment and could be either a physical or virtual machine. Worker nodes run the applications and workloads.

Kubernetes runs on top of an operating system and interacts with pods of containers running on the nodes.

The master node is responsible for maintaining the desired state of the cluster, such as which applications are running and which container images they use. The Kubernetes master node takes commands from an administrator (or DevOps team) and relays those instructions to the worker nodes.

This handoff works with a multitude of services to automatically decide which node is best for the task. It then allocates resources and assigns pods to that node to fulfill the requested work.
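
Deciding which node is best can be broken into two steps: filter out nodes that cannot fit the pod’s CPU and memory requests, then score the remaining nodes and pick the highest. The Python sketch below is a hypothetical illustration of that filter-then-score pattern. The `Node` fields, units, and the scoring rule (prefer the node that keeps the largest share of its capacity free) are assumptions made for the example; the real scheduler supports many configurable filtering and scoring rules.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_total: float  # CPU cores on the node
    mem_total: float  # memory on the node, in GiB
    cpu_free: float   # cores not yet reserved by other pods
    mem_free: float   # GiB not yet reserved by other pods


def schedule(nodes: List[Node], cpu: float, mem: float) -> Optional[str]:
    """Place one pod requesting `cpu` cores and `mem` GiB; return the chosen node name."""
    # Filter: drop nodes that cannot fit the request at all.
    feasible = [n for n in nodes if n.cpu_free >= cpu and n.mem_free >= mem]
    if not feasible:
        return None  # no node fits, so the pod would stay pending

    # Score: prefer the node that keeps the largest share of its capacity free.
    def score(n: Node) -> float:
        return ((n.cpu_free - cpu) / n.cpu_total + (n.mem_free - mem) / n.mem_total) / 2

    best = max(feasible, key=score)
    # Allocate: reserve the requested resources on the chosen node.
    best.cpu_free -= cpu
    best.mem_free -= mem
    return best.name


if __name__ == "__main__":
    cluster = [
        Node("node-a", cpu_total=4.0, mem_total=8.0, cpu_free=2.0, mem_free=4.0),
        Node("node-b", cpu_total=4.0, mem_total=8.0, cpu_free=3.0, mem_free=6.0),
    ]
    print(schedule(cluster, cpu=1.0, mem=2.0))
    print(schedule(cluster, cpu=8.0, mem=1.0))
```

A different scoring rule, such as preferring the fullest node that still fits, would pack pods more tightly; the filter step stays the same either way.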

From an infrastructure point of view, there is little change to how you manage containers. The control over containers just happens at a higher level, giving better control without the need to micromanage each separate container or node.

Some work is necessary, but it’s mostly a matter of assigning a Kubernetes master, defining nodes, and defining pods.

It runs on bare metal servers, virtual machines, public cloud providers, private clouds, and hybrid cloud environments. One of Kubernetes’ key advantages is that it works on many different kinds of infrastructure.

Learn to speak Kubernetes

Here are some common terms to help you better understand Kubernetes:

- Master: The machine that controls Kubernetes nodes. This is where all task assignments originate.

- Node: These machines perform the requested, assigned tasks. The Kubernetes master controls them.

- Pod: A group of one or more containers deployed to a single node. All containers in a pod share an IP address, IPC, hostname, and other resources. Pods abstract network and storage from the underlying container. This lets you move containers around the cluster more easily.

- Replication controller: This controls how many identical copies of a pod should be running somewhere on the cluster.

- Service: This decouples work definitions from the pods. Kubernetes service proxies automatically route service requests to the right pod, no matter where it moves in the cluster or even if it has been replaced.

- Kubelet: This service runs on nodes and reads the container manifests. It also ensures that the containers are running.

- kubectl: The command-line configuration tool for Kubernetes.

Where to get Kubernetes?

It is available in many forms, from open-source bits to commercially backed distributions to public cloud services. The best way to figure out where to get it is by use case. The official documentation also explains how to download Kubernetes.

- If you’re doing it all yourself: The source code and pre-built binaries for most common platforms are available from the GitHub repository.

- If you’re using Docker Community or Docker Enterprise: Docker’s most recent editions come with Kubernetes as a pack-in. This is the easiest way for container mavens to get a leg up with Kubernetes.

- If you’re deploying on-prem or in a private cloud: Chances are good that any infrastructure you choose for your private cloud has Kubernetes built-in. Standard-issue, certified, supported Kubernetes distributions are available from dozens of vendors including Canonical, IBM, Mesosphere, Mirantis, Oracle, Pivotal, Red Hat, Suse, VMware, and many more.

- If you’re deploying in a public cloud: The three major public cloud vendors all offer it as a service. Google Cloud Platform offers Google Kubernetes Engine, Microsoft Azure offers the Azure Kubernetes Service, and Amazon offers Amazon Elastic Kubernetes Service (EKS). Managed services are also available from IBM, Nutanix, Oracle, Pivotal, Platform9, Rancher Labs, Red Hat, VMware, and many other vendors.

Kubernetes Certification

If you feel like you have a good handle on how it works and you want to be able to demonstrate your expertise to employers, you might want to check out the pair of Kubernetes-related certifications offered jointly by the Linux Foundation and the Cloud Native Computing Foundation:

Certified Kubernetes Administrator: This certification seeks to provide assurance that CKAs have the skills, knowledge, and competency to perform the responsibilities of Kubernetes administrators, including application lifecycle management, installation, configuration, validation, cluster maintenance, and troubleshooting.

Certified Kubernetes Application Developer: It certifies that users can design, build, configure, and expose cloud-native applications for Kubernetes.

At the time of writing, the certification exams cost $300 each. There are also accompanying training courses, which offer a good, structured way to learn more about Kubernetes for anyone who is interested.

Kubernetes on AWS

Kubernetes is open-source software that allows you to deploy and manage containerized applications at scale. On AWS, it manages clusters of Amazon EC2 compute instances and runs containers on those instances with processes for deployment, maintenance, and scaling. With it, you can run any type of containerized application using the same toolset on-premises and in the cloud.

AWS makes it easy to run Kubernetes in the cloud with scalable and highly available virtual machine infrastructure, community-backed service integrations, and Amazon Elastic Kubernetes Service (EKS), a certified conformant, managed Kubernetes service.

With Kubernetes on AWS, you can:

- Fully manage your Kubernetes deployment yourself, provisioning and running it on your choice of powerful instance types.

- Run it without needing to provision or manage master instances.

- Store, encrypt, and manage container images for fast deployment.

How can you use it on AWS?

AWS makes it easy to run Kubernetes. You can choose to manage Kubernetes infrastructure yourself with Amazon EC2 or get an automatically provisioned, managed control plane with Amazon EKS. Either way, you get powerful, community-backed integrations to AWS services like VPC, IAM, and service discovery as well as the security, scalability, and high-availability of AWS.

There are two main ways to use it on AWS: run it yourself on Amazon EC2 virtual machine instances, or use the Amazon EKS service. AWS’s GitHub workshop covers running it yourself on EC2, and the Amazon EKS product page covers the managed service.

Advantages

Kubernetes manages app health, replication, load balancing, and hardware resource allocation for you. Apps that become “unhealthy,” or don’t conform to the definition of health you describe for them, can be automatically healed. Another benefit it provides is maximizing the use of hardware resources including memory, storage I/O, and network bandwidth.
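
Automatic healing depends on how "unhealthy" is defined. Kubernetes health checks (probes) typically tolerate a few failures before acting, so a single slow response does not trigger a restart. The Python sketch below is a hypothetical evaluator of a stream of health-check results; the `probe_actions` name and its action labels are made up, and `failure_threshold` is only loosely modeled on the `failureThreshold` setting of a Kubernetes probe (probe timing is ignored entirely).

```python
from typing import Iterable, List


def probe_actions(results: Iterable[bool], failure_threshold: int = 3) -> List[str]:
    """Hypothetical liveness-check evaluator.

    `results` is a sequence of health-check outcomes (True = healthy).
    The container is restarted after `failure_threshold` consecutive failures;
    any healthy result resets the failure count.
    """
    actions = []
    consecutive_failures = 0
    for healthy in results:
        if healthy:
            consecutive_failures = 0
            actions.append("ok")
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            actions.append("restart")
            consecutive_failures = 0  # the restarted container starts with a clean slate
        else:
            actions.append("failing")
    return actions


if __name__ == "__main__":
    print(probe_actions([True, False, False, True, False, False, False]))
```

The threshold is a trade-off: a low value heals broken apps faster, while a higher one avoids restarting containers that are merely busy for a moment.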

Kubernetes eases the deployment of preconfigured applications with Helm charts. Package managers such as Debian Linux’s APT and Python’s pip save users the trouble of manually installing and configuring an application, which is especially handy when an application has multiple external dependencies. Helm charts bring the same convenience to applications running on Kubernetes. You can create your own Helm charts from scratch, and you might have to if you’re building a custom app to deploy internally.

Kubernetes simplifies the management of storage, secrets, and other application-related resources. It provides abstractions that allow containers and apps to deal with storage in the same decoupled way as other resources. It has its own mechanism for natively handling secrets, although that mechanism needs to be configured with care.
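
One reason secrets need care: the values in a Kubernetes Secret’s `data` field are base64-encoded, and base64 is an encoding, not encryption. The Python sketch below builds a minimal Secret manifest as a dictionary to show this. The helper names and the secret name are hypothetical; the manifest fields used (`apiVersion: v1`, `kind: Secret`, `type: Opaque`, `data`) follow the standard Secret format.

```python
import base64
from typing import Dict


def encode_secret_data(values: Dict[str, str]) -> Dict[str, str]:
    """Base64-encode plain-text values, as expected in a Secret's `data` field."""
    return {key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()}


def decode_secret_data(data: Dict[str, str]) -> Dict[str, str]:
    """Reverse the encoding: anyone who can read the Secret can do this."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


def make_secret_manifest(name: str, values: Dict[str, str]) -> dict:
    """Build a minimal Secret manifest (hypothetical helper) as a Python dict."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encode_secret_data(values),
    }


if __name__ == "__main__":
    manifest = make_secret_manifest("db-credentials", {"password": "s3cr3t"})
    print(manifest["data"])
    print(decode_secret_data(manifest["data"]))
```

Because decoding is trivial, protecting secrets in practice means restricting who can read them and enabling encryption for the cluster’s stored data.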

Kubernetes applications can run in hybrid and multi-cloud environments. Federation can be used to create highly available or fault-tolerant deployments, whether or not you’re spanning multiple cloud environments.

I hope you liked our article on Kubernetes. If you’d like to contact cybercrip’s editors directly, send us a message.